Feature Selection

A feature selection case¶

We use the Pima Indians Diabetes dataset from Kaggle.

The dataset corresponds to classification tasks on which you need to predict if a person has diabetes based on 8 features.

# Loading the primary modules

import pandas as pd

import numpy as np

# Loading the data

dataframe = pd.read_csv("data/diabetes.csv")

# Alternative way

#url = "https://raw.githubusercontent.com/jbrownlee/Datasets/master/pima-indians-diabetes.data.csv"

#names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']

#dataframe = pd.read_csv(url, names=names)

dataframe.head(3)

| Pregnancies | Glucose | BloodPressure | SkinThickness | Insulin | BMI | DiabetesPedigreeFunction | Age | Outcome | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 6 | 148 | 72 | 35 | 0 | 33.6 | 0.627 | 50 | 1 |

| 1 | 1 | 85 | 66 | 29 | 0 | 26.6 | 0.351 | 31 | 0 |

| 2 | 8 | 183 | 64 | 0 | 0 | 23.3 | 0.672 | 32 | 1 |

# Renaming the features, fields or columns AND the response, dependent variable

names = ['preg', 'plas', 'pres', 'skin', 'test', 'mass', 'pedi', 'age', 'class']

dataframe.columns = names

dataframe.head(3)

| preg | plas | pres | skin | test | mass | pedi | age | class | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 6 | 148 | 72 | 35 | 0 | 33.6 | 0.627 | 50 | 1 |

| 1 | 1 | 85 | 66 | 29 | 0 | 26.6 | 0.351 | 31 | 0 |

| 2 | 8 | 183 | 64 | 0 | 0 | 23.3 | 0.672 | 32 | 1 |

# Keeping the values only

# Converting the DataFrame object to a Numpy ndarray

# to achieve faster computation

array = dataframe.values

# Segregating the data into separate variables

# Features and the labels are separated

# Features, col 0 to 7

X = array[:,0:8]

# Response, col 8

Y = array[:,8]

Filter feature selection techniques¶

Let’s implement a chi-squared statistical test for non-negative features to select 4 of the best features from the dataset; from the scikit-learn module.

Other “Correlation” techniques: Pearsons’ correlation, LDA, and ANOVA.

A word on the chi-squared test

The chi-squared test is used to determine whether there is a significant difference between the expected frequencies or proportions or distribution and the observed frequencies or proportions or distribution in one or more categories.

It is used to compared the variance of categories or samples vs. population.

It test if each category is mutually exclusive or statistically independent from the other categories.

If the categories are independent, there are not “correlated”.

The null hypothesis: mutually exclusive or statistically independent. When we reject the null hypothesis, we conclude to statistical dependence or homogeneity.

# Importing the necessary modules

# SelectKBest class can be used with a suite of different statistical tests

# to select a specific number of features

from sklearn.feature_selection import SelectKBest

from sklearn.feature_selection import chi2

# Feature extraction

test = SelectKBest(score_func=chi2, k=4)

fit = test.fit(X, Y)

# Summarizing scores

np.set_printoptions(precision=3)

print(fit.scores_)

1 2 | |

# Alternative view

pd.DataFrame({'names': names[0:8], 'scores': fit.scores_})

| names | scores | |

|---|---|---|

| 0 | preg | 111.519691 |

| 1 | plas | 1411.887041 |

| 2 | pres | 17.605373 |

| 3 | skin | 53.108040 |

| 4 | test | 2175.565273 |

| 5 | mass | 127.669343 |

| 6 | pedi | 5.392682 |

| 7 | age | 181.303689 |

You can see the scores for each attribute and the 4 attributes chosen (those with the highest scores): plas, test, mass, age.

This scores will help you further in determining the best features for training your model.

features = fit.transform(X)

# Summarizing selected features (plas, test, mass, age)

features[0:5,:]

1 2 3 4 5 | |

# Alternative view

pd.DataFrame(features[0:5,:], columns=['plas','test','mass','age'])

| plas | test | mass | age | |

|---|---|---|---|---|

| 0 | 148.0 | 0.0 | 33.6 | 50.0 |

| 1 | 85.0 | 0.0 | 26.6 | 31.0 |

| 2 | 183.0 | 0.0 | 23.3 | 32.0 |

| 3 | 89.0 | 94.0 | 28.1 | 21.0 |

| 4 | 137.0 | 168.0 | 43.1 | 33.0 |

# Original dataset

dataframe.head(5)

| preg | plas | pres | skin | test | mass | pedi | age | class | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 6 | 148 | 72 | 35 | 0 | 33.6 | 0.627 | 50 | 1 |

| 1 | 1 | 85 | 66 | 29 | 0 | 26.6 | 0.351 | 31 | 0 |

| 2 | 8 | 183 | 64 | 0 | 0 | 23.3 | 0.672 | 32 | 1 |

| 3 | 1 | 89 | 66 | 23 | 94 | 28.1 | 0.167 | 21 | 0 |

| 4 | 0 | 137 | 40 | 35 | 168 | 43.1 | 2.288 | 33 | 1 |

In R¶

The caret package provides tools to automatically report on the relevance and importance of attributes in your data and even select the most important features.

Data can contain attributes that are highly correlated with each other.

Many methods perform better if highly correlated attributes are removed.

The caret package provides findCorrelation which will analyze a correlation matrix of your data’s attributes report on attributes that can be removed.

Using the Pima Indians Diabetes dataset, let’s remove attributes with an absolute correlation of 0.75 or higher.

# Ensuring the results are repeatable

set.seed(7)

# Loading the packages

library(mlbench)

library(caret)

# Loading the data

data(PimaIndiansDiabetes)

# Calculating the correlation matrix

correlationMatrix <- cor(PimaIndiansDiabetes[,1:8])

# Summarizing the correlation matrix

print(correlationMatrix)

# Finding attributes that are highly corrected (ideally >0.75)

highlyCorrelated <- findCorrelation(correlationMatrix, cutoff=0.5)

# Printing the indexes of highly correlated attributes

print(highlyCorrelated)r

The importance of features can be estimated from data by building a model.

Some methods like decision trees have a built-in mechanism to report on variable importance.

For other algorithms, the importance can be estimated using a ROC curve analysis conducted for each attribute.

Let’s constructs a Learning Vector Quantization (LVQ) model.

# Ensuring the results are repeatable

set.seed(7)

# Loading the packages

library(mlbench)

library(caret)

# Loading the data

data(PimaIndiansDiabetes)

# Calculating the correlation matrix

correlationMatrix <- cor(PimaIndiansDiabetes[,1:8])

# Summarizing the correlation matrix

print(correlationMatrix)

# Finding attributes that are highly corrected (ideally > 0.75)

highlyCorrelated <- findCorrelation(correlationMatrix, cutoff=0.5)

# Printing indexes of highly correlated attributes

print(highlyCorrelated)

Wrapper feature selection techniques¶

Let’s implement a Recursive Feature Elimination from the scikit-learn module.

Other techniques: Forward Selection, Backward Elimination, and Combination of forward selection and backward elimination.

The Recursive Feature Elimination (or RFE) works by recursively removing attributes and building a model on those attributes that remain.

It uses the model accuracy to identify which attributes (and a combination of attributes) contribute the most to predicting the target attribute.

You use RFE with the Logistic Regression classifier to select the top 3 features.

The choice of algorithms does not matter too much as long as it is consistent.

# Importing your necessary modules

from sklearn.feature_selection import RFE

from sklearn.linear_model import LogisticRegression

# Feature extraction

model = LogisticRegression()

rfe = RFE(model, 3)

fit = rfe.fit(X, Y)

# Summarizing the selection of the attributes

print("Num Features: %s" % (fit.n_features_))

print("Selected Features: %s" % (fit.support_))

print("Feature Ranking: %s" % (fit.ranking_))

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 | |

# Alternative view

pd.DataFrame({'names': names[0:8], 'selected': fit.support_, 'ranking': fit.ranking_})

| names | ranking | selected | |

|---|---|---|---|

| 0 | preg | 1 | True |

| 1 | plas | 2 | False |

| 2 | pres | 3 | False |

| 3 | skin | 5 | False |

| 4 | test | 6 | False |

| 5 | mass | 1 | True |

| 6 | pedi | 1 | True |

| 7 | age | 4 | False |

You can see that RFE chose the top 3 features as preg, mass, pedi.

These are marked True in the support array and marked with a choice “1” in the ranking array.

You can also use RFE with the Bagged decision trees like Random Forest and Extra Trees to estimate the importance of features.

# Feature extraction

model = ExtraTreesClassifier()

fit = model.fit(X, Y)

# Summarizing the selection of the attributes

print(fit.feature_importances_)

1 2 3 4 5 6 | |

print("Num Features: %s" % (fit.n_features_))

print("Features Importance: %s" % fit.feature_importances_)

1 2 3 | |

# Alternative view

pd.DataFrame({'names': names[0:8], 'importance': fit.feature_importances_}).\

sort_values(by='importance', ascending=False)

| importance | names | |

|---|---|---|

| 1 | 0.200415 | plas |

| 5 | 0.158448 | mass |

| 7 | 0.152433 | age |

| 6 | 0.122269 | pedi |

| 0 | 0.104908 | preg |

| 2 | 0.095849 | pres |

| 4 | 0.084972 | test |

| 3 | 0.080706 | skin |

Another dataset, another example¶

Let’s tackle another example using the built-in iris dataset, reusing the Logistic Regression and the Extra Tree Ensemble.

# Importing your necessary modules

from sklearn.feature_selection import RFE

from sklearn.linear_model import LogisticRegression

# Loading the iris datasets

from sklearn import datasets

dataset = datasets.load_iris()

names = ['sepal length','sepal width','petal length','petal width']

dataset_df = pd.DataFrame(dataset.data, columns=names)

dataset_df.head(3)

| sepal length | sepal width | petal length | petal width | |

|---|---|---|---|---|

| 0 | 5.1 | 3.5 | 1.4 | 0.2 |

| 1 | 4.9 | 3.0 | 1.4 | 0.2 |

| 2 | 4.7 | 3.2 | 1.3 | 0.2 |

# Creating a base classifier used to evaluate a subset of attributes

model = LogisticRegression()

# Creating the RFE model and select 3 attributes

rfe = RFE(model, 3)

fit = rfe.fit(dataset.data, dataset.target)

1 2 3 4 5 6 7 8 | |

print("Num Features: %s" % (fit.n_features_))

print("Selected Features: %s" % (fit.support_))

print("Feature Ranking: %s" % (fit.ranking_))

1 2 3 | |

# Alternative view

pd.DataFrame({'names': names, 'selected': fit.support_, 'ranking': fit.ranking_})

| names | ranking | selected | |

|---|---|---|---|

| 0 | sepal length | 2 | False |

| 1 | sepal width | 1 | True |

| 2 | petal length | 1 | True |

| 3 | petal width | 1 | True |

Keep top-ranking features (rank 1) and leave out the other features (rank 2).

# Importing your necessary modules

from sklearn import metrics

from sklearn.ensemble import ExtraTreesClassifier

# Creating a base classifier

rfe = ExtraTreesClassifier()

# Creating the RFE model and select 3 attributes

rfe.fit(dataset.data, dataset.target)

fit = rfe.fit(dataset.data, dataset.target)

1 2 3 4 5 | |

print("Num Features: %s" % (fit.n_features_))

print("Features Importance: %s" % rfe.feature_importances_)

1 2 | |

# Alternative view

pd.DataFrame({'names': names, 'importance': rfe.feature_importances_}).\

sort_values(by='importance', ascending=False)

| importance | names | |

|---|---|---|

| 3 | 0.520975 | petal width |

| 2 | 0.373365 | petal length |

| 0 | 0.084710 | sepal length |

| 1 | 0.020951 | sepal width |

The last results confirm the previous results.

In R¶

Recursive Feature Elimination or RFE.

# Ensuring the results are repeatable

set.seed(7)

# Loading the packages

library(mlbench)

library(caret)

# Loading the data

data(PimaIndiansDiabetes)

# Defining the control using a random forest selection function

control <- rfeControl(functions=rfFuncs, method="cv", number=10)

# Running the RFE algorithm

results <- rfe(PimaIndiansDiabetes[,1:8], PimaIndiansDiabetes[,9], sizes=c(1:8), rfeControl=control)

# Summarize the results

print(results)

# Listing the chosen features

predictors(results)

# Plotting the results

plot(results, type=c("g", "o"))

Embedded feature selection techniques¶

Let’s use the Ridge regression from the scikit-learn module; a regularization technique as well.

Other techniques: LASSO and Elastic Net.

Find out more about regularization techniques.

# Importing your necessary module

from sklearn.linear_model import Ridge

# Using Ridge regression to determine the R-squared

ridge = Ridge(alpha=1.0)

ridge.fit(X,Y)

1 2 | |

In order to better understand the results of Ridge regression, you will implement a little helper function that will help you to print the results in a better so that you can interpret them easily.

# Implementing a function for pretty-printing the coefficients

def pretty_print_coefs(coefs, names = None, sort = False):

if names == None:

names = ["X%s" % x for x in range(len(coefs))]

lst = zip(coefs, names)

if sort:

lst = sorted(lst, key = lambda x:-np.abs(x[0]))

return " + ".join("%s * %s" % (round(coef, 3), name)

for coef, name in lst)

# Applying the function

print("Ridge model:", pretty_print_coefs(ridge.coef_))

print('')

# Applying the function with the names

print("Ridge model:", pretty_print_coefs(ridge.coef_, names))

1 2 3 | |

# Applying the function with the names and sorting the results

print("Ridge model:", pretty_print_coefs(ridge.coef_, names, True))

1 | |

You can spot all the coefficient terms appended with the feature variables.

You can pick the most essential features.

The sorted top 3 features are pedi, preg, mass.

A word on the Ridge regression

- It is also known as L2-Regularization.

- For correlated features, it means that they tend to get similar coefficients.

- Feature having negative coefficients don’t contribute that much. But in a more complex scenario where you are dealing with lots of features, then this score will definitely help you in the ultimate feature selection decision-making process.

Takeway¶

The three techniques help to understand the features of a particular dataset in a comprehensive manner.

Feature selection is essentially a part of data preprocessing which is considered to be the most time-consuming part of any machine learning pipeline.

These techniques will help you to approach it in a more systematic way and machine learning friendly way.

You will be able to interpret the features more accurately.

Why Penalize the Magnitude of Coefficients?¶

Given a sine curve (between 60° and 300°) and some random noise using the following code:

# Importing modules

import numpy as np

import pandas as pd

import random

import matplotlib.pyplot as plt

%matplotlib inline

from matplotlib.pylab import rcParams

rcParams['figure.figsize'] = 12, 10

# Defining input array with angles

# from 60deg to 300deg converted to radians

x = np.array([i*np.pi/180 for i in range(60,300,4)])

# Setting seed for reproducability

np.random.seed(10)

y = np.sin(x) + np.random.normal(0,0.15,len(x))

data = pd.DataFrame(np.column_stack([x,y]),columns=['x','y'])

plt.plot(data['x'],data['y'],'.');

Let’s try to estimate the sine function using polynomial regression with powers of x form 1 to 15.

Let’s add a column for each power up to 15.

# Power of 1 is already there

for i in range(2,16):

colname = 'x_%d'%i # new var will be x_power

data[colname] = data['x']**i

data.head()

| x | y | x_2 | x_3 | x_4 | x_5 | x_6 | x_7 | x_8 | x_9 | x_10 | x_11 | x_12 | x_13 | x_14 | x_15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.047198 | 1.065763 | 1.096623 | 1.148381 | 1.202581 | 1.259340 | 1.318778 | 1.381021 | 1.446202 | 1.514459 | 1.585938 | 1.660790 | 1.739176 | 1.821260 | 1.907219 | 1.997235 |

| 1 | 1.117011 | 1.006086 | 1.247713 | 1.393709 | 1.556788 | 1.738948 | 1.942424 | 2.169709 | 2.423588 | 2.707173 | 3.023942 | 3.377775 | 3.773011 | 4.214494 | 4.707635 | 5.258479 |

| 2 | 1.186824 | 0.695374 | 1.408551 | 1.671702 | 1.984016 | 2.354677 | 2.794587 | 3.316683 | 3.936319 | 4.671717 | 5.544505 | 6.580351 | 7.809718 | 9.268760 | 11.000386 | 13.055521 |

| 3 | 1.256637 | 0.949799 | 1.579137 | 1.984402 | 2.493673 | 3.133642 | 3.937850 | 4.948448 | 6.218404 | 7.814277 | 9.819710 | 12.339811 | 15.506664 | 19.486248 | 24.487142 | 30.771450 |

| 4 | 1.326450 | 1.063496 | 1.759470 | 2.333850 | 3.095735 | 4.106339 | 5.446854 | 7.224981 | 9.583578 | 12.712139 | 16.862020 | 22.366630 | 29.668222 | 39.353420 | 52.200353 | 69.241170 |

Now that we have all the 15 powers, let’s make 15 different linear regression models with each model containing variables with powers of x from 1 to the particular model number.

For example, the feature set of model 8 will be {x, x_2, x_3, … , x_8}.

# Importing the Linear Regression model from scikit-learn

from sklearn.linear_model import LinearRegression

def linear_regression(data, power, models_to_plot):

#initialize predictors:

predictors=['x']

if power>=2:

predictors.extend(['x_%d'%i for i in range(2,power+1)])

# Fitting the model

linreg = LinearRegression(normalize=True)

linreg.fit(data[predictors],data['y'])

y_pred = linreg.predict(data[predictors])

# Checking if a plot is to be made for the entered power

if power in models_to_plot:

plt.subplot(models_to_plot[power])

plt.tight_layout()

plt.plot(data['x'],y_pred)

plt.plot(data['x'],data['y'],'.')

plt.title('Plot for power: %d'%power)

# Returning the result in pre-defined format

rss = sum((y_pred-data['y'])**2)

ret = [rss]

ret.extend([linreg.intercept_])

ret.extend(linreg.coef_)

return ret

Now, we can make all 15 models and compare the results.

# Initializing a DataFrame to store the results

col = ['rss','intercept'] + ['coef_x_%d'%i for i in range(1,16)]

ind = ['model_pow_%d'%i for i in range(1,16)]

coef_matrix_simple = pd.DataFrame(index=ind, columns=col)

# Defining the powers for which a plot is required

models_to_plot = {1:231,3:232,6:233,9:234,12:235,15:236}

# Iterating through all powers and assimilate results

for i in range(1,16):

coef_matrix_simple.iloc[i-1,0:i+2] = linear_regression(data, power=i, models_to_plot=models_to_plot)

As the model complexity increases, the models tend to fit even smaller deviations in the training data set.

This leads to overfitting.

# Displaying the analysis

pd.options.display.float_format = '{:,.2g}'.format

coef_matrix_simple

| rss | intercept | coef_x_1 | coef_x_2 | coef_x_3 | coef_x_4 | coef_x_5 | coef_x_6 | coef_x_7 | coef_x_8 | coef_x_9 | coef_x_10 | coef_x_11 | coef_x_12 | coef_x_13 | coef_x_14 | coef_x_15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| model_pow_1 | 3.3 | 2 | -0.62 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_2 | 3.3 | 1.9 | -0.58 | -0.006 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_3 | 1.1 | -1.1 | 3 | -1.3 | 0.14 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_4 | 1.1 | -0.27 | 1.7 | -0.53 | -0.036 | 0.014 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_5 | 1 | 3 | -5.1 | 4.7 | -1.9 | 0.33 | -0.021 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_6 | 0.99 | -2.8 | 9.5 | -9.7 | 5.2 | -1.6 | 0.23 | -0.014 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_7 | 0.93 | 19 | -56 | 69 | -45 | 17 | -3.5 | 0.4 | -0.019 | NaN | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_8 | 0.92 | 43 | -1.4e+02 | 1.8e+02 | -1.3e+02 | 58 | -15 | 2.4 | -0.21 | 0.0077 | NaN | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_9 | 0.87 | 1.7e+02 | -6.1e+02 | 9.6e+02 | -8.5e+02 | 4.6e+02 | -1.6e+02 | 37 | -5.2 | 0.42 | -0.015 | NaN | NaN | NaN | NaN | NaN | NaN |

| model_pow_10 | 0.87 | 1.4e+02 | -4.9e+02 | 7.3e+02 | -6e+02 | 2.9e+02 | -87 | 15 | -0.81 | -0.14 | 0.026 | -0.0013 | NaN | NaN | NaN | NaN | NaN |

| model_pow_11 | 0.87 | -75 | 5.1e+02 | -1.3e+03 | 1.9e+03 | -1.6e+03 | 9.1e+02 | -3.5e+02 | 91 | -16 | 1.8 | -0.12 | 0.0034 | NaN | NaN | NaN | NaN |

| model_pow_12 | 0.87 | -3.4e+02 | 1.9e+03 | -4.4e+03 | 6e+03 | -5.2e+03 | 3.1e+03 | -1.3e+03 | 3.8e+02 | -80 | 12 | -1.1 | 0.062 | -0.0016 | NaN | NaN | NaN |

| model_pow_13 | 0.86 | 3.2e+03 | -1.8e+04 | 4.5e+04 | -6.7e+04 | 6.6e+04 | -4.6e+04 | 2.3e+04 | -8.5e+03 | 2.3e+03 | -4.5e+02 | 62 | -5.7 | 0.31 | -0.0078 | NaN | NaN |

| model_pow_14 | 0.79 | 2.4e+04 | -1.4e+05 | 3.8e+05 | -6.1e+05 | 6.6e+05 | -5e+05 | 2.8e+05 | -1.2e+05 | 3.7e+04 | -8.5e+03 | 1.5e+03 | -1.8e+02 | 15 | -0.73 | 0.017 | NaN |

| model_pow_15 | 0.7 | -3.6e+04 | 2.4e+05 | -7.5e+05 | 1.4e+06 | -1.7e+06 | 1.5e+06 | -1e+06 | 5e+05 | -1.9e+05 | 5.4e+04 | -1.2e+04 | 1.9e+03 | -2.2e+02 | 17 | -0.81 | 0.018 |

It is clearly evident that the size of coefficients increase exponentially with increase in model complexity.

It means that we’re putting a lot of emphasis on that feature, i.e. the particular feature is a good predictor for the outcome.

When it becomes too large, the algorithm starts modelling intricate relations to estimate the output and ends up overfitting to the particular training data.

Ridge regression¶

A ridge regression performs L2 regularization’, i.e. it adds a factor of sum of squares of coefficients in the optimization objective.

Objective = RSS + alpha * (sum of square of coefficients)

The alpha parameter balances the amount of emphasis given to minimizing RSS vs minimizing sum of square of coefficients.

- alpha = 0, a simple linear regression;

- alpha = infinite, infinite weight on square of coefficients, anything less than zero will make the objective infinite; all coefficients zero;

- 0 < alpha < infinite, somewhere between 0 and 1 for simple linear regression.

One thing is for sure that any non-zero value would give values less than that of simple linear regression.

# Importing the Ridge Regression model from scikit-learn

from sklearn.linear_model import Ridge

def ridge_regression(data, predictors, alpha, models_to_plot={}):

# Fitting the model

ridgereg = Ridge(alpha=alpha,normalize=True)

ridgereg.fit(data[predictors],data['y'])

y_pred = ridgereg.predict(data[predictors])

# Checking if a plot is to be made for the entered alpha

if alpha in models_to_plot:

plt.subplot(models_to_plot[alpha])

plt.tight_layout()

plt.plot(data['x'],y_pred)

plt.plot(data['x'],data['y'],'.')

plt.title('Plot for alpha: %.3g'%alpha)

# Returning the result in pre-defined format

rss = sum((y_pred-data['y'])**2)

ret = [rss]

ret.extend([ridgereg.intercept_])

ret.extend(ridgereg.coef_)

return ret

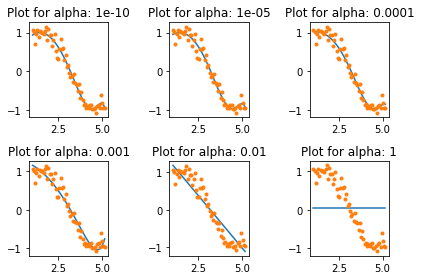

Let’s analyze the result of Ridge regression for 10 different values of alpha ranging from 1e-15 to 20.

Each of these 10 models will contain all the 15 variables and only the value of alpha would differ.

This is different from the simple linear regression case where each model had a subset of features.

# Initializing predictors to be set of 15 powers of x

predictors=['x']

predictors.extend(['x_%d'%i for i in range(2,16)])

# Setting the different values of alpha to be tested

alpha_ridge = [1e-15, 1e-10, 1e-8, 1e-4, 1e-3,1e-2, 1, 5, 10, 20]

# Initializing the dataframe for storing coefficients.

col = ['rss','intercept'] + ['coef_x_%d'%i for i in range(1,16)]

ind = ['alpha_%.2g'%alpha_ridge[i] for i in range(0,10)]

coef_matrix_ridge = pd.DataFrame(index=ind, columns=col)

models_to_plot = {1e-15:231, 1e-10:232, 1e-4:233, 1e-3:234, 1e-2:235, 5:236}

for i in range(10):

coef_matrix_ridge.iloc[i,] = ridge_regression(data, predictors, alpha_ridge[i], models_to_plot)

1 2 3 4 | |

As the value of alpha increases, the model complexity reduces.

Though higher values of alpha reduce overfitting, significantly high values can cause underfitting as well (alpha = 5, for example).

Thus alpha should be chosen wisely.

A widely accept technique is cross-validation, i.e. the value of alpha is iterated over a range of values and the one giving higher cross-validation score is chosen.

# Displaying the analysis

pd.options.display.float_format = '{:,.2g}'.format

coef_matrix_ridge

| rss | intercept | coef_x_1 | coef_x_2 | coef_x_3 | coef_x_4 | coef_x_5 | coef_x_6 | coef_x_7 | coef_x_8 | coef_x_9 | coef_x_10 | coef_x_11 | coef_x_12 | coef_x_13 | coef_x_14 | coef_x_15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha_1e-15 | 0.87 | 94 | -3e+02 | 3.7e+02 | -2.3e+02 | 61 | 2.6 | -5.1 | 0.62 | 0.17 | -0.03 | -0.0052 | 0.001 | 0.00017 | -4.6e-05 | 3.1e-06 | -3.8e-08 |

| alpha_1e-10 | 0.92 | 11 | -29 | 31 | -15 | 2.9 | 0.17 | -0.091 | -0.011 | 0.002 | 0.00064 | 2.4e-05 | -2e-05 | -4.2e-06 | 2.2e-07 | 2.3e-07 | -2.3e-08 |

| alpha_1e-08 | 0.95 | 1.3 | -1.5 | 1.7 | -0.68 | 0.039 | 0.016 | 0.00016 | -0.00036 | -5.4e-05 | -2.9e-07 | 1.1e-06 | 1.9e-07 | 2e-08 | 3.9e-09 | 8.2e-10 | -4.6e-10 |

| alpha_0.0001 | 0.96 | 0.56 | 0.55 | -0.13 | -0.026 | -0.0028 | -0.00011 | 4.1e-05 | 1.5e-05 | 3.7e-06 | 7.4e-07 | 1.3e-07 | 1.9e-08 | 1.9e-09 | -1.3e-10 | -1.5e-10 | -6.2e-11 |

| alpha_0.001 | 1 | 0.82 | 0.31 | -0.087 | -0.02 | -0.0028 | -0.00022 | 1.8e-05 | 1.2e-05 | 3.4e-06 | 7.3e-07 | 1.3e-07 | 1.9e-08 | 1.7e-09 | -1.5e-10 | -1.4e-10 | -5.2e-11 |

| alpha_0.01 | 1.4 | 1.3 | -0.088 | -0.052 | -0.01 | -0.0014 | -0.00013 | 7.2e-07 | 4.1e-06 | 1.3e-06 | 3e-07 | 5.6e-08 | 9e-09 | 1.1e-09 | 4.3e-11 | -3.1e-11 | -1.5e-11 |

| alpha_1 | 5.6 | 0.97 | -0.14 | -0.019 | -0.003 | -0.00047 | -7e-05 | -9.9e-06 | -1.3e-06 | -1.4e-07 | -9.3e-09 | 1.3e-09 | 7.8e-10 | 2.4e-10 | 6.2e-11 | 1.4e-11 | 3.2e-12 |

| alpha_5 | 14 | 0.55 | -0.059 | -0.0085 | -0.0014 | -0.00024 | -4.1e-05 | -6.9e-06 | -1.1e-06 | -1.9e-07 | -3.1e-08 | -5.1e-09 | -8.2e-10 | -1.3e-10 | -2e-11 | -3e-12 | -4.2e-13 |

| alpha_10 | 18 | 0.4 | -0.037 | -0.0055 | -0.00095 | -0.00017 | -3e-05 | -5.2e-06 | -9.2e-07 | -1.6e-07 | -2.9e-08 | -5.1e-09 | -9.1e-10 | -1.6e-10 | -2.9e-11 | -5.1e-12 | -9.1e-13 |

| alpha_20 | 23 | 0.28 | -0.022 | -0.0034 | -0.0006 | -0.00011 | -2e-05 | -3.6e-06 | -6.6e-07 | -1.2e-07 | -2.2e-08 | -4e-09 | -7.5e-10 | -1.4e-10 | -2.5e-11 | -4.7e-12 | -8.7e-13 |

The RSS increases with increases in alpha, this model complexity reduces.

An alpha as small as 1e-15 gives us significant reduction in magnitude of coefficients.

High alpha values can lead to significant underfitting.

Note the rapid increase in RSS for values of alpha greater than 1.

Though the coefficients are very very small, they are NOT zero.

Let’s reconfirm the same by determining the number of zeros in each row of the coefficients dataset.

coef_matrix_ridge.apply(lambda x: sum(x.values==0),axis=1)

1 2 3 4 5 6 7 8 9 10 11 | |

Lasso regression¶

LASSO stands for Least Absolute Shrinkage and Selection Operator.

Lasso regression performs L1 regularization, i.e. it adds a factor of sum of absolute value of coefficients in the optimization objective.

Objective = RSS + alpha * (sum of absolute value of coefficients)

The alpha works similar to that of ridge and provides a trade-off between balancing RSS and magnitude of coefficients.

- alpha = 0, a simple linear regression;

- alpha = infinite, infinite weight on square of coefficients, anything less than zero will make the objective infinite; all coefficients zero.

- 0 < alpha < infinite, somewhere between 0 and 1 for simple linear regression.

It appears to be very similar to the Ridge regression.

# Importing the Ridge Regression model from scikit-learn

from sklearn.linear_model import Lasso

def lasso_regression(data, predictors, alpha, models_to_plot={}):

#Fit the model

lassoreg = Lasso(alpha=alpha,normalize=True, max_iter=1e5)

lassoreg.fit(data[predictors],data['y'])

y_pred = lassoreg.predict(data[predictors])

#Check if a plot is to be made for the entered alpha

if alpha in models_to_plot:

plt.subplot(models_to_plot[alpha])

plt.tight_layout()

plt.plot(data['x'],y_pred)

plt.plot(data['x'],data['y'],'.')

plt.title('Plot for alpha: %.3g'%alpha)

#Return the result in pre-defined format

rss = sum((y_pred-data['y'])**2)

ret = [rss]

ret.extend([lassoreg.intercept_])

ret.extend(lassoreg.coef_)

return ret

The additional parameters defined in Lasso function max_iter is the maximum number of iterations for which we want the model to run if it doesn’t converge before.

This exists for Ridge regression as well but setting this to a higher than default value was required in this case.

# Initializing predictors to all 15 powers of x

predictors=['x']

predictors.extend(['x_%d'%i for i in range(2,16)])

# Defining the alpha values to test

alpha_lasso = [1e-15, 1e-10, 1e-8, 1e-5,1e-4, 1e-3,1e-2, 1, 5, 10]

# Initializing the dataframe to store coefficients

col = ['rss','intercept'] + ['coef_x_%d'%i for i in range(1,16)]

ind = ['alpha_%.2g'%alpha_lasso[i] for i in range(0,10)]

coef_matrix_lasso = pd.DataFrame(index=ind, columns=col)

# Defining the models to plot

models_to_plot = {1e-10:231, 1e-5:232,1e-4:233, 1e-3:234, 1e-2:235, 1:236}

# Iterating over the 10 alpha values:

for i in range(10):

coef_matrix_lasso.iloc[i,] = lasso_regression(data, predictors, alpha_lasso[i], models_to_plot);

1 2 3 4 5 6 | |

This again tells us that the model complexity decreases with increases in the values of alpha.

But notice the straight line at alpha=1.

# Displaying the analysis

pd.options.display.float_format = '{:,.2g}'.format

coef_matrix_lasso

| rss | intercept | coef_x_1 | coef_x_2 | coef_x_3 | coef_x_4 | coef_x_5 | coef_x_6 | coef_x_7 | coef_x_8 | coef_x_9 | coef_x_10 | coef_x_11 | coef_x_12 | coef_x_13 | coef_x_14 | coef_x_15 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| alpha_1e-15 | 0.96 | 0.22 | 1.1 | -0.37 | 0.00089 | 0.0016 | -0.00012 | -6.4e-05 | -6.3e-06 | 1.4e-06 | 7.8e-07 | 2.1e-07 | 4e-08 | 5.4e-09 | 1.8e-10 | -2e-10 | -9.2e-11 |

| alpha_1e-10 | 0.96 | 0.22 | 1.1 | -0.37 | 0.00088 | 0.0016 | -0.00012 | -6.4e-05 | -6.3e-06 | 1.4e-06 | 7.8e-07 | 2.1e-07 | 4e-08 | 5.4e-09 | 1.8e-10 | -2e-10 | -9.2e-11 |

| alpha_1e-08 | 0.96 | 0.22 | 1.1 | -0.37 | 0.00077 | 0.0016 | -0.00011 | -6.4e-05 | -6.3e-06 | 1.4e-06 | 7.8e-07 | 2.1e-07 | 4e-08 | 5.3e-09 | 2e-10 | -1.9e-10 | -9.3e-11 |

| alpha_1e-05 | 0.96 | 0.5 | 0.6 | -0.13 | -0.038 | -0 | 0 | 0 | 0 | 7.7e-06 | 1e-06 | 7.7e-08 | 0 | 0 | 0 | -0 | -7e-11 |

| alpha_0.0001 | 1 | 0.9 | 0.17 | -0 | -0.048 | -0 | -0 | 0 | 0 | 9.5e-06 | 5.1e-07 | 0 | 0 | 0 | -0 | -0 | -4.4e-11 |

| alpha_0.001 | 1.7 | 1.3 | -0 | -0.13 | -0 | -0 | -0 | 0 | 0 | 0 | 0 | 0 | 1.5e-08 | 7.5e-10 | 0 | 0 | 0 |

| alpha_0.01 | 3.6 | 1.8 | -0.55 | -0.00056 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | 0 | 0 | 0 | 0 | 0 | 0 |

| alpha_1 | 37 | 0.038 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 |

| alpha_5 | 37 | 0.038 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 |

| alpha_10 | 37 | 0.038 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 | -0 |

Apart from the expected inference of higher RSS for higher alphas, we can see the following:

- For the same values of alpha, the coefficients of lasso regression are much smaller as compared to that of ridge regression (compare row 1 of the 2 tables);

- For the same alpha, lasso has higher RSS (poorer fit) as compared to ridge regression;

- Many of the coefficients are zero even for very small values of alpha.

The real difference from ridge is coming out in the last inference.

Let’s check the number of coefficients which are zero in each model using the following code.

coef_matrix_lasso.apply(lambda x: sum(x.values==0),axis=1)

1 2 3 4 5 6 7 8 9 10 11 | |

For the same values of alpha, the coefficients of lasso regression are much smaller as compared to that of ridge regression (compare row 1 of the 2 tables).

For the same alpha, lasso has higher RSS (poorer fit) as compared to ridge regression.

Many of the coefficients are zero even for very small values of alpha.

The real difference from ridge is coming out in the last inference.

We can observe that even for a small value of alpha, a significant number of coefficients are zero.

This also explains the horizontal line fit for alpha=1 in the lasso plots, it’s just a baseline model!

This phenomenon of most of the coefficients being zero is called ‘sparsity’. Although lasso performs feature selection, this level of sparsity is achieved in special cases only which we’ll discuss towards the end.

Key Difference¶

- Ridge: it includes all (or none) of the features in the model. Thus, the major advantage of ridge regression is coefficient shrinkage and reducing model complexity.

- Lasso: along with shrinking coefficients, lasso performs feature selection as well. (Remember the ‘selection’ in the lasso full-form?) As we observed earlier, some of the coefficients become exactly zero, which is equivalent to the particular feature being excluded from the model.

Traditionally, techniques like stepwise regression were used to perform feature selection and make parsimonious models.

But with advancements in Machine Learning, ridge and lasso regression provide very good alternatives as they give much better output, require fewer tuning parameters and can be automated to a large extent.

Typical Use Cases¶

- Ridge: it is majorly used to prevent overfitting. Since it includes all the features, it is not very useful in case of an exorbitantly high number of features, say in millions, as it will pose computational challenges.

- Lasso: since it provides sparse solutions, it is generally the model of choice (or some variant of this concept) for modelling cases where the number of features is in millions or more. In such a case, getting a sparse solution is of great computational advantage as the features with zero coefficients can simply be ignored.

It’s not hard to see why the stepwise selection techniques become practically very cumbersome to implement in high dimensionality cases.

Thus, lasso provides a significant advantage.

Presence of Highly Correlated Features¶

- Ridge: it generally works well even in presence of highly correlated features as it will include all of them in the model but the coefficients will be distributed among them depending on the correlation.

- Lasso: it arbitrarily selects any one feature among the highly correlated ones and reduced the coefficients of the rest to zero. Also, the chosen variable changes randomly with changes in model parameters. This generally doesn’t work that well as compared to ridge regression.

This disadvantage of lasso can be observed in the example we discussed above.

Since we used a polynomial regression, the variables were highly correlated. (Not sure why? Check the output of data.corr()).

Thus, we saw that even small values of alpha were giving significant sparsity (i.e. high numbers of coefficients as zero).

Along with Ridge and Lasso, Elastic Net is another useful technique which combines both L1 and L2 regularization. It can be used to balance out the pros and cons of ridge and lasso regression. I encourage you to explore it further.

ElasticNet Regression¶

ElasticNet is hybrid of Lasso and Ridge Regression techniques.

It is trained with L1 and L2 prior as regularizers.

Elastic-net is useful when there are multiple features which are correlated.

Lasso is likely to pick one of these at random, while elastic-net is likely to pick both.

Objective = RSS + alpha1 * (sum of square of coefficients) + alpha2 * (sum of absolute value of coefficients)

Elastic-Net to inherit some of Ridge’s stability under rotation.

It encourages group effect in case of highly correlated variables.

There are no limitations on the number of selected variables.

It can suffer from double shrinkage.

Data reduction techniques¶

Principal Component Analysis (or PCA) uses linear algebra to transform the dataset into a compressed form.

Generally, this is called a data reduction technique.

A property of PCA is that you can choose the number of dimensions or principal component in the transformed result.

# Importing your necessary module

from sklearn.decomposition import PCA

# Feature extraction

pca = PCA(n_components = 3)

fit = pca.fit(X)

# Summarizing components

print("Explained Variance: %s" % (fit.explained_variance_ratio_))

1 | |

print(fit.components_)

1 2 3 4 5 6 | |

The transformed dataset (3 principal components) bare little resemblance to the source data.