Introduction to data.world

Foreword

Code snippets and excerpts from the course. Python 3. From DataCamp.

Introduction¶

Open data is at the heart of data.world.

Open an account, log in, find a dataset, download it or copy URL or integrate data.world with Python/R/Tableau by installing dw in the terminal. In Python for example.

pip install datadotworld[pandas] or conda install datadotworld-py.

then,

dw configure.

Pull the API token from the account and enter it.

There are similar procedures for R and Tableau. Everything is documented on the website (when trying to download any dataset, more options appear for Python/R/Tableau).

Option 1: use the data.world Jupyter Notebook template as a way to jump-start a project with Python.

Option 2:

%pylab inline

import pandas as pd

import os

# Import the datadotworld module as dw

import datadotworld as dw

1 | |

Working with Datasets¶

Import a Dataset¶

Once the module is loaded, there are two ways to import a dataset.

# First

# Import the city council votes dataset

dataset = dw.load_dataset('stephen-hoover/chicago-city-council-votes')

The dataset is now in the cache.

# Second

# Import the city council votes dataset

dataset = dw.load_dataset('https://data.world/stephen-hoover/chicago-city-council-votes')

Working with Datasets¶

From the beginning.

# Import the datadotworld module as dw

import datadotworld as dw

# Import the Pretty Print module

import pprint as pp

# Import the city council votes dataset

dataset = dw.load_dataset('https://data.world/stephen-hoover/chicago-city-council-votes')

# Use describe()

# Review all the metadata that is downloaded with the dataset

# Print it to the screen using pp.pprint()

pp.pprint(dataset.describe())

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 | |

# Use describe() again

# Get a description of a specific resource: alderman_votes

# Print it to the screen

pp.pprint(dataset.describe('alderman_votes'))

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 | |

Reading the metadata¶

All fields begin with {. The alderman_votes variable has 5 fields.

Accessing the data¶

We have access to three properties: raw_data, tables, and dataframes. Each of these returns a dictionary of values, just in different formats: bytes, list and pandas.DataFrame objects.

# Use the dataframes property

# Assign the alderman_votes table to the variable votes_dataframe

votes_dataframe = dataset.dataframes['alderman_votes']

# Use the pandas shape property

# Get rows/columns size for the `votes_dataframe` dataframe

pp.pprint(votes_dataframe.shape)

1 | |

# Use the pandas head function

# Print the first 3 rows of the `votes_dataframe` dataframe

pp.pprint(votes_dataframe.head(3))

1 2 3 4 | |

Working with multiple datasets¶

import datadotworld as dw

# Loaded two datasets

int_dataset = dw.load_dataset('https://data.world/jonloyens/intermediate-data-world')

fipsCodes_dataset = dw.load_dataset('https://data.world/uscensusbureau/fips-state-codes')

# Create two dataframes:

# police_shootings from the 'fatal_police_shootings_data' table of int_dataset and

# state_abbrvs, from the 'statesfipscodes' table of fipsCodes_dataset

police_shootings = int_dataset.dataframes['fatal_police_shootings_data']

state_abbrvs = fipsCodes_dataset.dataframes['statesfipscodes']

# Merge the two datasets together

# on the state and stusab fields

# Assign to a merged_dataframe variable

merged_dataframe = police_shootings.merge(state_abbrvs,

how = 'left',

left_on = 'state',

right_on='stusab')

# Add a 'citystate' column to the merged_dataframe dataframe,

# populating it with the concatinated values from the 'city' and 'state_name' columns,

# separated by ', '

merged_dataframe["citystate"] = merged_dataframe["city"] + \

", " + merged_dataframe["state_name"]

## Print head of merged_dataframe

pp.pprint(merged_dataframe.head(5))

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 | |

Querying with data.world¶

Another way to pull data in from data.world is to use the query() method to use SQL or SPARQL to query one or more datasets. Check out the full dwSQL documentation.

SQL: Querying a table¶

import datadotworld as dw

# Run a SQL query

# Select all rows from the `unhcr_all` table where `Year` equals 2010

# Assign the query string to a `sql_query` variable

sql_query = "SELECT * FROM `unhcr_all` WHERE Year = 2010"

# Use the `query` method of the datadotworld module

# Run the `sql_query`

# Assign the results to a `query2010` variable

query2010 = dw.query('https://data.world/agriculture/national-farmers-markets', sql_query)

# Use the dataframe property of the resulting query

# Create a dataframe variable named `unhcr2010`

unhcr2010 = query2010.dataframe

# Print the first 5 rows using the head method

pp.pprint(unhcr2010.head(5))

SQL: Query multiple tables (join)¶

Write queries against multiple tables within a single dataset or across many datasets!

import datadotworld as dw

# Run a SQL query

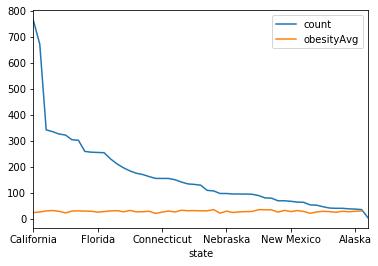

# Select state, the count of farmers markets (fmid),

# and average obesity rate

# from agriculture.`national-farmers-markets`.export,

# LEFT JOINED against health.`obesity-by-state-2014`

# adult_obese on state and location

sql_query = "SELECT state, count(fmid) as count, Avg(obesity.Value) as obesityAvg FROM Export LEFT JOIN health.`obesity-by-state-2014`.`adult_obese` as obesity ON state = obesity.location GROUP BY state ORDER BY count desc"

# Use the `query` method of the datadotworld module

# Run the `sql_query` against the `https://data.world/agriculture/national-farmers-markets` dataset

# Assign the results to a `queryResults` variable

queryResults = dw.query('https://data.world/agriculture/national-farmers-markets', \

sql_query)

# Use the dataframes property of the resulting query

# Create a dataframe variable named `stateStats`

stateStats = queryResults.dataframe

import matplotlib.pyplot as plt

# Plot the stateStats results using state as the x-axis

stateStats.plot(x='state')

plt.show()

SPARQL: Querying linked data¶

Behind the scenes, data.world is converting all tabular data files into linked data using Semantic Web technologies. This allows to upload any tabular format, like xlsx, csv, tsv or json, and instantly be able to query and join them without issue. SQL is great for this, but SPARQL - which is the query language for linked data - can be more robust and flexible than SQL, allowing for more complex queries.

Check out the full SPARQL documentation.

import datadotworld as dw

# A SPARQL query assigned to the `sparql_query` variable:

sparql_query = "PREFIX GOT: <https://tutorial.linked.data.world/d/sparqltutorial/> SELECT ?FName ?LName WHERE {?person GOT:col-got-house \"Stark\" . ?person GOT:col-got-fname ?FName . ?person GOT:col-got-lname ?LName .}"

# Use the pre-defined SPARQL query

# Query dataset http://data.world/tutorial/sparqltutorial and

# return the results to a queryResults variable

queryResults = dw.query('http://data.world/tutorial/sparqltutorial', \

sparql_query, query_type='sparql')

# Use the dataframe property of the resulting query

# Create a dataframe variable named `houseStark`

houseStark = queryResults.dataframe

# Use pp.pprint() to print the dataframe to the screen

pp.pprint(houseStark)

1 2 3 4 5 6 7 | |

Wrap up¶

import datadotworld as dw

# Import the sys module

import sys

# Import a dataset

refugee_dataset = dw.load_dataset('nrippner/refugee-host-nations')

# Get the size of the dataset:

sys.getsizeof(refugee_dataset)

1 | |

# List all of the data files:

dataframes = refugee_dataset.dataframes

for df in dataframes:

pp.pprint(df)

1 2 3 4 5 6 7 | |

# Print all of the files in a dataset:

resources = refugee_dataset.describe()['resources']

pp.pprint('name:')

for r in resources:

pp.pprint(r['name'])

pp.pprint('\ntype of file:')

for r in resources:

pp.pprint(r['format'])

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 | |

Advanced SDK Functionality¶

The data.world Python SDK includes a variety of API wrappers, available via the ApiClient class, to create, replace, update, and delete a dataset. In this section, we walk through a few common tasks:

- Use

api_client()to get an instance of theApiClient. - Create a dataset.

- Add a file from a data frame: we write to a local csv and the upload the file.

- Add a file from a source URL: this is an easy way to add external data to the dataset and keep it up to date. We use a file from GitHub as an example, but we can use any URL source that points to a file.

- Sync the dataset: this simple call reloads any files with a source URL, to ensure the latest version.

- Update the dataset: after creating a dataset, use

update_datasetto change attiributes like description, summary or tags.

Use help(api_client) to learn more about each available function or see the full data.world API documentation.

Open the API¶

import datadotworld as dw

# Create an instance of the ApiClient using `api_client()`

api_client = dw.api_client()

# See api_client documentation

help(api_client)

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 182 183 184 185 186 187 188 189 190 191 192 193 194 195 196 197 198 199 200 201 202 203 204 205 206 207 208 209 210 211 212 213 214 215 216 217 218 219 220 221 222 223 224 225 226 227 228 229 230 231 232 233 234 235 236 237 238 239 240 241 242 243 244 245 246 247 248 249 250 251 252 253 254 255 256 257 258 259 260 261 262 263 264 265 266 267 268 269 270 271 272 273 274 275 276 277 278 | |

Create a dataset using create_dataset method¶

api_client.create_dataset(owner_id="<YOUR_USERNAME>", title="<DATASET_TITLE>", visibility='PRIVATE').

import datadotworld as dw

# Replace the < > items with your username and desired dataset title

# Visibility can be changed to 'OPEN' if you choose

api_client.create_dataset(owner_id="ugo", title="intermediate-data-world", visibility='OPEN')

1 | |

Write a data frame to a local file and upload to dataset¶

Add file to the dataset using upload_files(). Replace the < > items with the dataset values.

api_client.upload_files('<YOUR_USERNAME>/<DATASET_TITLE>',['police_shootings.csv']).

import os

cwd = os.getcwd()

#print(cwd)

import datadotworld as dw

# Create a dataframe

police_shootings = dw.load_dataset('https://data.world/jonloyens/intermediate-data-world').dataframes['fatal_police_shootings_data']

# Write dataframe to local csv using pandas to_csv() method

# in the current working directory (cwd)

police_shootings.to_csv('police_shootings.csv', encoding='utf-8')

Check the current working directory.

Update dataset¶

Add a file from an external source URL. In this example we use GitHub.

Replace the < > items with the dataset values

api_client.add_files_via_url('<YOUR_USERNAME>/<DATASET_TITLE>',{'shootings_of_police.csv': 'https://github.com/fivethirtyeight/data/blob/master/police-deaths/all_data.csv’}).

For files added with add_files_via_url, fetch the latest version using the sync() method:

api_client.sync_files('<YOUR_USERNAME>/<DATASET_TITLE>').

Use the update_dataset() method to update the metadata after dataset creation:

api_client.update_dataset('<YOUR_USERNAME>/<DATASET_TITLE>', description='Dataset created to test out the python SDK functionality.', tags=['test', 'datacamp']).